Classifying Animals

Hi everyone, my name is Matthew, I have been brought on to help with the machine learning side of things. I’m very excited to be part of this project.

My job here is to take all the thermal footage we have been recording and identify the animals in it.

This kind of the work has been done many, times, before, however, these all use regular cameras. The predators we are trying to identify are nocturnal, so thermal vision is a must.

Thermal has become popular recently due to falling prices of good quality cameras.

A Baseline Model - Classifying Frames Individually

It is always a good idea when starting a new project to begin with the simplest idea possible. This gives you a chance to get to know the data and provides a good baseline result to compare against for future work.

In this case, the simplest way to start is to break apart the videos and classify each frame individually.

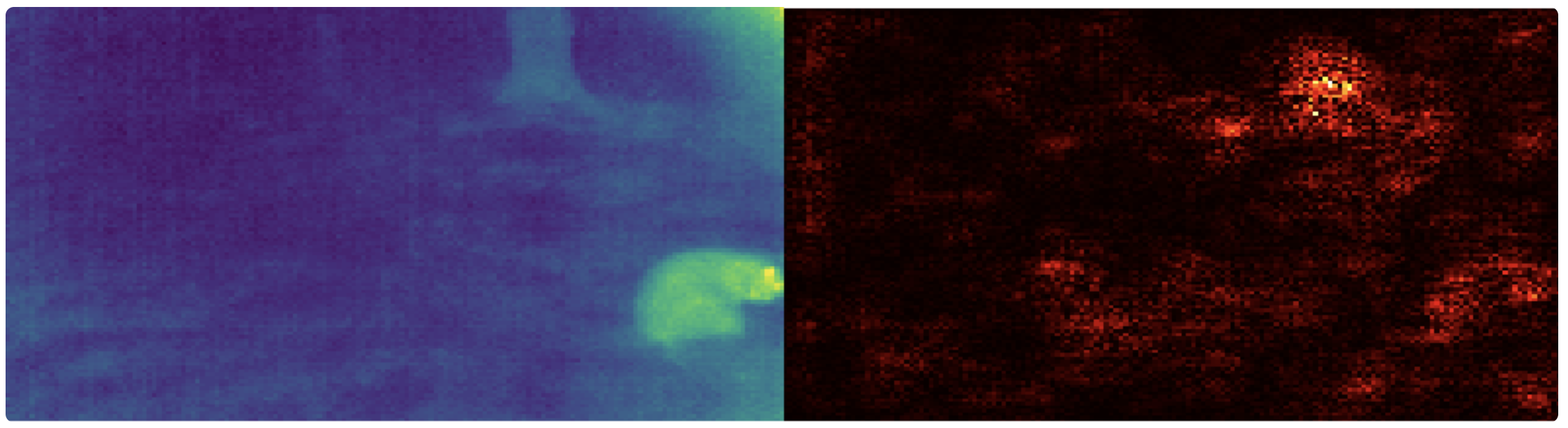

An example frame is shown below

Possum in thermal vision on left, and saliency map on right. The saliency map indicates that the tree is the primary predictor of the animal class.

This approach as 3 significant flaws

It cannot deal with multiple objects within one frame

No motion information is included

The background can be used to identify the animal.

The 3rd point is the most problematic. Some of our cameras typically pick up certain kinds of animals. So, if the algorithm can learn which camera it is looking at it can guess what kind of animal it might be.

The saliency map lets us know which parts of the image are important to the classifier, and in the above example, it is the tree, not the possum that the algorithm is paying attention to!

Removing the background

A good solution to this problem is to simply remove the background. Mammals have a body temperature of between 25 and 30 degrees Celsius, and we are filming mostly at night. This gives quite a good separation between the background and the object of interest.

A simple filtering algorithm removes almost all the background. The saliency map shows the possum is now the primary predictor.

Great! Now the algorithm now focuses primarily on the possum and less on the background. Putting together multiple frames and classifying the entire sequence results in an accuracy of around 76% using this very simple method.

This establishes a good baseline mark for future algorithms.

Identify, Track, and Classify

Above we discussed a very simple classification system and its limitations. But we can do much better than that by using an Identify, track, and classify process. The idea is based on some very good prior research. In this paper, they implement an identify, track and classify process that should work very well for our needs. A big change from their work is they used a support vector machine to classify the animals, we, however, will be using a more advanced convolutional neural network.

Identifying Objects

The first step is to try and identify ‘regions of interest’ within the video. This is a little trickier than it first sounds. Consider the following frame

It might be difficult to see, but there is a tiny bird in the centre of the frame. This is definitely not the hottest part of the image, but using some clever tricks we can identify it.

The solution is to estimate the background of the video. Then, by subtracting that background from the frame, we get a very clear separation between foreground and background objects.

You can see how well this works! Unfortunately, on moving footage, we cannot use this method and must stick to a simpler thresholding system (which works fine during night time anyway).

I then take this filtered image and try to work out which pixels are important, and which pixels belong to which object. This is a bit of a fine art, but I think I have something that works for now.

The process is as follows

Subtract the background.

Subtract an, automatically adjusted, threshold level

Binarise the frame by setting all pixels <=0 to 0, and all others to 1

Run an erosion algorithm (this removes islands of pixels)

Run a connected components algorithm (this figures out which pixels are connected to which other ones

Measure the size of the connected components, and add a 12-pixel frame

These frames are then passed on the tracker as ‘regions of interest’.

Tracking Objects

Now that we have a method to identify the regions of interest we need to track them through the frame. I use quite a simple model for this.

I record a list of tracks, each with its own velocity. Each frame I predict where the track should be (according to its velocity) and look for a region of interest there. If I find a region of a similar size and with a significant amount of overlap I assume it is the same object.

Any regions that do not get matched up are initialized as new tracks, and any tracks that do not find a match are removed.

This generates a few false positives (phantom tracks as I have been calling them), but removing short tracks, and tracks that do not move sorts most of these out.

Shown below is a video demonstrating the algorithm in action. It is able to keep track of multiple objects even with two animals in the video.

The video has 4 quadrants which are:

Top-left: frame, with regions of interest

Top-right: background subtracted frame

Bottom-left: the identified ‘important pixels’

Bottom-right: the estimated background.

Video 1: Cleaning videos for Machine Learning

Each track is saved as a small video cropped to contain just the animal along with some additional data, such as the velocity of the frame.

These tracks will then be passed onto the classifier for classification, which I hope to talk about in my next blog post :)